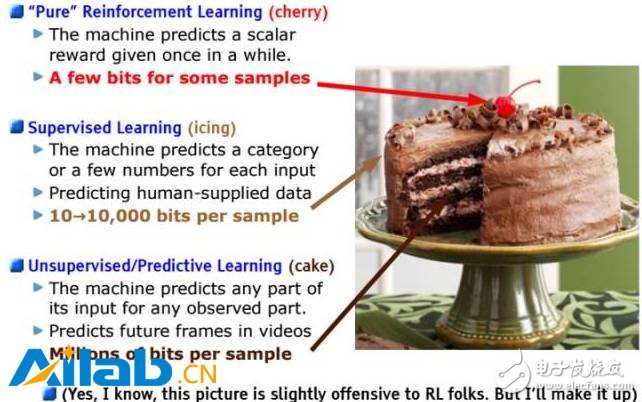

Yann LeCun has repeatedly used a famous "cake" metaphor in his talks:

If artificial intelligence is a cake, then reinforcement learning is the cherry on top, supervised learning is the icing, and unsupervised learning is the cake itself.

At the moment we only know how to make the icing and the cherry, but we don't know how to make the cake itself.

At NIPS 2016 in Barcelona in early December, LeCun began using a new term, "predictive learning", in place of "unsupervised learning" for the cake itself.

LeCun said in his speech:

One of the key factors we have been missing is predictive (or unsupervised) learning, which refers to the ability of machines to model the real environment, predict possible futures, and understand how the world works through observation and demonstration.

This is an interesting, subtle change that hints at how LeCun's view of the "cake" has shifted. The view is that a lot of foundational work remains before the development of AI can accelerate. In other words, extending today's supervised learning with more capabilities (such as memory, knowledge bases, and agents) still leaves a long, hard road before we can build that "predictive base layer".

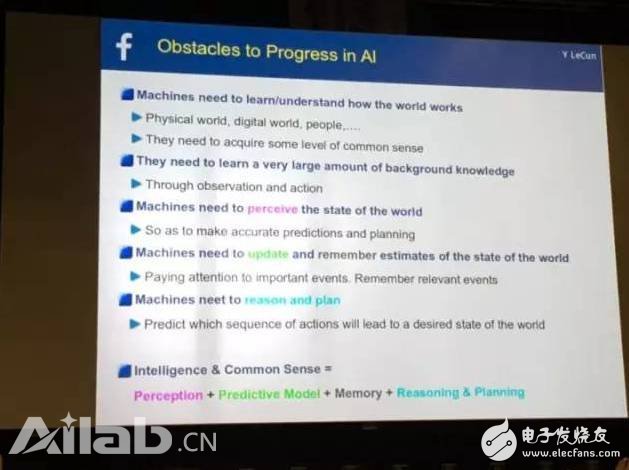

In his NIPS 2016 presentation, LeCun showed a slide listing the obstacles to progress in AI:

Machines need to learn/understand how the world works (including the physical world, the digital world, people, etc., to gain a certain level of common sense)

The machine needs to learn a lot of background knowledge (by observation and action)

The machine needs to observe the state of the world (to make accurate predictions and plans)

The machine needs to update and remember the estimation of the state of the world (focus on major events, remember related events)

Machines need reasoning and planning (predicting which behaviors will eventually lead to an ideal world state)

Predictive learning clearly requires not only learning without supervision, but also learning a model that predicts the world. LeCun is trying to change our ingrained taxonomy of AI, perhaps signalling that AI still has a long, hard road ahead before it reaches its final goal.

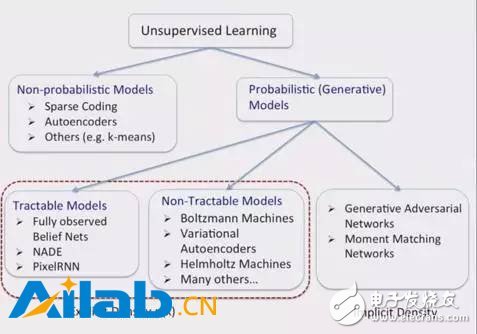

Professor Ruslan Salakhutdinov, who was recently hired by Apple, gave a talk on unsupervised learning. In the bottom right corner of one of his slides, he mentioned Generative Adversarial Networks (GANs).

A GAN consists of two competing neural networks, a generator and a discriminator: the former tries to produce convincing fake images, and the latter tries to tell real images from fakes.
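The two roles can be illustrated with a toy sketch. Everything here is an assumption made for illustration: the "images" are single numbers, the generator and discriminator are one-line functions rather than neural networks, and the parameter names (`scale`, `shift`, `weight`, `bias`) are hypothetical. The generator loss uses the common non-saturating form, which is one of several options.

```python
import math
import random

def generator(z, scale=1.0, shift=0.0):
    """Toy generator: maps a noise value z to a fake 'sample' (one number)."""
    return scale * z + shift

def discriminator(x, weight=1.0, bias=0.0):
    """Toy discriminator: estimated probability that x is a real sample."""
    return 1.0 / (1.0 + math.exp(-(weight * x + bias)))

def discriminator_loss(real_samples, fake_samples, **d_params):
    """Cross-entropy: low when reals score near 1 and fakes score near 0."""
    real_term = sum(-math.log(discriminator(x, **d_params)) for x in real_samples)
    fake_term = sum(-math.log(1.0 - discriminator(x, **d_params)) for x in fake_samples)
    return real_term / len(real_samples) + fake_term / len(fake_samples)

def generator_loss(fake_samples, **d_params):
    """Low when the discriminator is fooled, i.e. fakes score near 1."""
    total = sum(-math.log(discriminator(x, **d_params)) for x in fake_samples)
    return total / len(fake_samples)

rng = random.Random(0)
real = [rng.gauss(4.0, 1.0) for _ in range(1000)]             # "real" data centred at 4
fake = [generator(rng.gauss(0.0, 1.0)) for _ in range(1000)]  # untrained generator output

sharp = dict(weight=3.0, bias=-6.0)  # separates the two clouds around x = 2
blind = dict(weight=0.0, bias=0.0)   # cannot tell them apart, always answers 0.5

print("sharp D:", discriminator_loss(real, fake, **sharp), generator_loss(fake, **sharp))
print("blind D:", discriminator_loss(real, fake, **blind), generator_loss(fake, **blind))
```

The two losses pull in opposite directions: a discriminator that separates real from fake well has a low loss of its own and hands the generator a high one. In actual training both sets of parameters would be updated in alternation by gradient steps, which this sketch deliberately leaves out.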

An interesting feature of GANs is that a hand-specified, closed-form loss function is not required. In fact, some systems are able to learn their own loss function, which is very surprising. One shortcoming of GANs, however, is that they are difficult to train: training amounts to finding a Nash equilibrium of a non-cooperative two-player game.
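For reference, this two-player game is usually written as the minimax objective from the original GAN formulation (Goodfellow et al., 2014). The symbols below ($G$ for the generator, $D$ for the discriminator, $p_{\text{data}}$ for the data distribution, $p_z$ for the noise distribution) follow that paper's notation and do not come from the talks discussed here:

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z}\big[\log\big(1 - D(G(z))\big)\big]
$$

At the idealized equilibrium the generator's samples are indistinguishable from real data, so the best the discriminator can do is output $D(x) = \tfrac{1}{2}$ everywhere, giving $V = -\log 4$. In practice, alternating gradient updates are not guaranteed to reach this point, which is one way to see why training is hard.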

LeCun said in a recent talk on unsupervised learning that adversarial networks are "the coolest idea in machine learning in 20 years."

OpenAI, a non-profit research organization funded by Elon Musk, has a particular fondness for generative models. Their motivation can be summed up by Richard Feynman's famous saying, "What I cannot create, I do not understand." Feynman is really describing "first principles" thinking: understanding things by building them up from validated concepts.

In the AI world, this perhaps means that if a machine can generate highly realistic output (which is a big leap), then it has developed an understanding of a predictive model. This is exactly the approach taken by GANs.

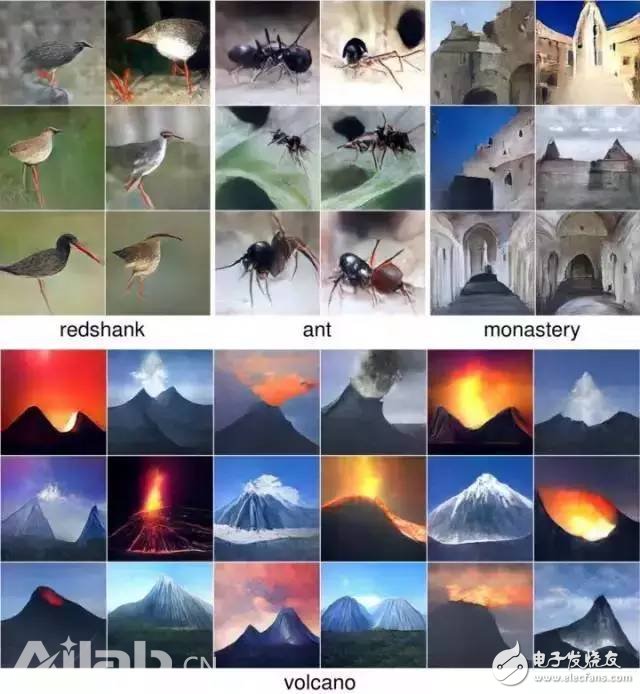

These images were generated by a GAN-based system from given words. For example, given the words "redshank", "ant", "monastery", and "volcano", it generated the images shown here.

These generated images are amazing; I think many people could not paint this well.

Of course, the system is not perfect; for example, the following images are garbled. Even so, I have seen many people draw worse than this when playing draw-and-guess games.

The current consensus is that these generative models do not accurately capture the "semantics" of a given task: they do not understand the meaning of words like "ant", "redshank", or "volcano", but they are good at imitation and prediction. These images are not reproductions of pictures from the original training set; rather, they are results inferred from a generalized model that come very close to reality.

This adversarial approach differs from classical machine learning. We have two competing neural networks, yet they seem to work together to achieve "generalization".

In classical machine learning, researchers first define an objective function and then apply their favorite optimization algorithm. One problem is that we cannot know for certain whether the objective function is the right one. The surprising thing about GANs is that they can even learn their own objective function!

One fascinating finding here is that deep learning systems are extremely malleable. Classical machine learning treats the objective function and constraints, or the optimization algorithm, as fixed; in deep learning this no longer applies. What's even more surprising is that even meta-level methods can be used: a deep learning system can learn how to learn.
