Abstract: This paper studies the subtitle data format defined in the ETS 300 743 specification of the DVB standards. It combines that format with the demultiplexing and filtering, layer processing and user interface modules of a set-top box platform, and presents a subtitle display implementation that is correct, complete and timely.

1 Introduction

Digital TV has developed rapidly and has gradually entered thousands of households. Beyond traditional TV programs, using advanced digital TV technology to offer users more information services is an inevitable trend in the development of radio and television. Subtitles are a simple and intuitive way to deliver information, and they matter for two reasons. First, they give people with hearing impairments an alternative channel for the "voice" content of a program. Second, with simple post-production (for example, multilingual display), they make it easy to distribute TV programs worldwide. DVB is the most widely used digital TV transmission standard in the world, and it specifies subtitles in multiple languages. This makes subtitles a good vehicle for exchanging TV programs between countries and regions.

2 DVB Digital TV Subtitle Specification

2.1 Subtitle control information specification

The control information specification covers two things: how the subtitle data itself is carried, and where the index information used to locate it is stored. DVB stipulates that subtitle data is multiplexed into the program as an elementary stream of PES packets that carry private data. This is the same way audio and video data are carried. The index information is placed in the program map table (PMT), in the descriptor loop of the entry for that private-data stream, using DVB descriptor syntax.
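The following minimal sketch shows how a receiver might locate subtitle streams in one PMT section. It walks the elementary-stream loop, keeps entries with stream_type 0x06 (PES packets containing private data, per ISO/IEC 13818-1), and collects any descriptor with tag 0x59 (the DVB subtitling descriptor). The function names are hypothetical, the code is written in Python for brevity, and the CRC_32 check is deliberately omitted.

```python
from typing import Iterator, List, Tuple

PRIVATE_PES_STREAM_TYPE = 0x06    # ISO/IEC 13818-1: PES packets containing private data
SUBTITLING_DESCRIPTOR_TAG = 0x59  # DVB subtitling_descriptor


def iter_descriptors(loop: bytes) -> Iterator[Tuple[int, bytes]]:
    """Yield (descriptor_tag, descriptor body) for each descriptor in a loop."""
    pos = 0
    while pos + 2 <= len(loop):
        tag, length = loop[pos], loop[pos + 1]
        yield tag, loop[pos + 2:pos + 2 + length]
        pos += 2 + length


def find_subtitle_streams(pmt: bytes) -> List[Tuple[int, bytes]]:
    """Return (elementary_PID, subtitling descriptor body) pairs from one PMT section.

    Hypothetical helper; CRC_32 is not verified here.
    """
    if pmt[0] != 0x02:
        raise ValueError("not a PMT section (table_id != 0x02)")
    section_length = ((pmt[1] & 0x0F) << 8) | pmt[2]
    end = 3 + section_length - 4                      # ES loop stops before CRC_32
    program_info_length = ((pmt[10] & 0x0F) << 8) | pmt[11]
    pos = 12 + program_info_length                    # skip program-level descriptors
    found = []
    while pos + 5 <= end:
        stream_type = pmt[pos]
        pid = ((pmt[pos + 1] & 0x1F) << 8) | pmt[pos + 2]
        es_info_length = ((pmt[pos + 3] & 0x0F) << 8) | pmt[pos + 4]
        es_info = pmt[pos + 5:pos + 5 + es_info_length]
        if stream_type == PRIVATE_PES_STREAM_TYPE:
            for tag, body in iter_descriptors(es_info):
                if tag == SUBTITLING_DESCRIPTOR_TAG:
                    found.append((pid, body))
        pos += 5 + es_info_length
    return found
```

The returned PID is what the data extraction module would program into its PES filter. The descriptor body is kept raw so that it can be decoded separately.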

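The PMT entry with stream type 0x06 describes the subtitle stream. It gives the PID of the subtitle PES packets and carries the associated descriptors. The subtitling descriptor (defined in EN 300 468) has descriptor_tag 0x59. After descriptor_tag (8 bits) and descriptor_length (8 bits), it contains one 8-byte entry per subtitle service. Each entry consists of ISO_639_language_code (24 bits), subtitling_type (8 bits), composition_page_id (16 bits) and ancillary_page_id (16 bits).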

Parsing the subtitling descriptor yields, for each subtitle service, the language code (ISO_639_language_code), the subtitling type, the composition page and the optional ancillary page. The receiver then uses this information as the index for extracting the subtitle data.
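The following is a minimal sketch of decoding the body of a subtitling descriptor (the bytes after descriptor_tag and descriptor_length) into its 8-byte entries. The function and type names are hypothetical, and the code assumes the language code is three 8-bit characters that can be decoded as Latin-1.

```python
from typing import List, NamedTuple


class SubtitleEntry(NamedTuple):
    language: str             # ISO_639_language_code, e.g. "eng"
    subtitling_type: int
    composition_page_id: int
    ancillary_page_id: int


def parse_subtitling_descriptor(body: bytes) -> List[SubtitleEntry]:
    """Split a subtitling descriptor body into 8-byte entries (hypothetical helper)."""
    entries = []
    for pos in range(0, len(body) - 7, 8):   # ignore any incomplete trailing entry
        chunk = body[pos:pos + 8]
        entries.append(SubtitleEntry(
            language=chunk[0:3].decode("latin-1"),
            subtitling_type=chunk[3],
            composition_page_id=int.from_bytes(chunk[4:6], "big"),
            ancillary_page_id=int.from_bytes(chunk[6:8], "big"),
        ))
    return entries
```

A receiver could offer the user a choice among the returned languages. It would then filter the PES data for segments whose page_id equals the chosen composition_page_id, or the ancillary_page_id when shared data is carried there.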

2.2 Subtitle data encoding specification

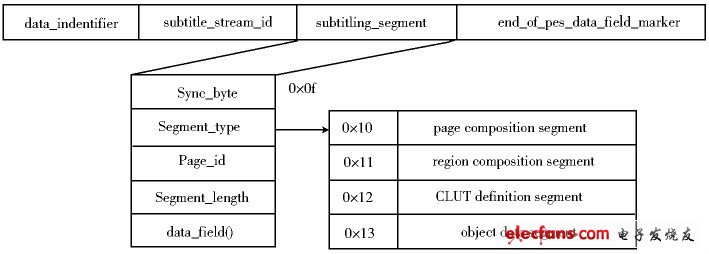

Subtitles are displayed on the terminal as pages. Each page is divided into one or more regions. Each region is associated with one or more graphic objects and with the color lookup table (CLUT) that determines its colors. The subtitle data encoding is defined to match this model. Subtitle data is carried in the payload of PES packets, with the structure shown in Figure 1.

Figure 1 Data structure of subtitle data

This structure shows that the PES data field begins with two identification bytes. The first is data_identifier, which marks the stream as DVB subtitles (value 0x20). The second is subtitle_stream_id (value 0x00). The field ends with end_of_PES_data_field_marker (value 0xFF). Between these lie the subtitle segments. Each segment begins with a 6-byte header:

- 1 sync byte (value 0x0F);
- 1 segment_type byte, which identifies the kind of segment carried in data_field();
- a 2-byte page_id, which identifies the subtitle page the segment belongs to;
- a 2-byte segment_length, which gives the size of the payload that follows.
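The framing described above can be walked with a short loop. The following minimal sketch uses a hypothetical function name. It checks the two identification bytes, reads each 6-byte segment header, and stops at the 0xFF end marker. Segment payloads are returned undecoded.

```python
from typing import Iterator, NamedTuple


class Segment(NamedTuple):
    segment_type: int
    page_id: int
    data: bytes  # segment_length bytes of payload


def iter_segments(pes_data: bytes) -> Iterator[Segment]:
    """Yield subtitle segments from one PES data field (hypothetical helper)."""
    if len(pes_data) < 3 or pes_data[0] != 0x20 or pes_data[1] != 0x00:
        raise ValueError("not a DVB subtitle PES data field")
    pos = 2
    while pos < len(pes_data) and pes_data[pos] == 0x0F:   # sync_byte
        if pos + 6 > len(pes_data):
            raise ValueError("truncated segment header")
        segment_type = pes_data[pos + 1]
        page_id = int.from_bytes(pes_data[pos + 2:pos + 4], "big")
        segment_length = int.from_bytes(pes_data[pos + 4:pos + 6], "big")
        end = pos + 6 + segment_length
        if end > len(pes_data):
            raise ValueError("truncated segment payload")
        yield Segment(segment_type, page_id, pes_data[pos + 6:end])
        pos = end
    if pos >= len(pes_data) or pes_data[pos] != 0xFF:
        raise ValueError("missing end_of_PES_data_field_marker (0xFF)")
```

As an example, a data field holding a page composition segment (segment_type 0x10) followed by an end-of-display-set segment (segment_type 0x80) yields two Segment values. The decoder then dispatches on segment_type.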

There are four main types of subtitle segments:

Page composition segment (segment_type 0x10). For the page identified by page_id, it specifies the page time-out (how long the page stays on screen), the page state, and the region_id of each region shown on the page together with that region's horizontal and vertical position.

Region composition segment (segment_type 0x11). It defines a region's width and height, its pixel depth, the CLUT_id of the CLUT it uses, and its background color. It also gives the id of each object placed in the region and that object's position within the region.

CLUT definition segment (segment_type 0x12). It defines the colors by mapping the pixel codes transmitted in the objects to actual Y, Cr, Cb and transparency (T) values for display.

Object data segment (segment_type 0x13). It defines the coding method and the coded data of an object. An object is either pixel-coded or coded as a string of characters. Each object can be regarded as a displayable image unit.
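The CLUT definition segment in the list above lends itself to a compact parser. The following minimal sketch uses hypothetical names. Each entry carries flags saying which of the 2-, 4- and 8-bit CLUTs it belongs to, and a full_range_flag that selects either 8 bits per component or a reduced 6/4/4/2-bit layout. The code assumes reduced-range values are the most significant bits of the 8-bit value and shifts them up accordingly. Note that the specification treats Y = 0 as fully transparent. This sketch does not apply that rule.

```python
from typing import Dict, NamedTuple, Tuple


class ClutEntry(NamedTuple):
    y: int
    cr: int
    cb: int
    t: int  # transparency: 0 = opaque, 255 = fully transparent


def parse_clut_segment(data: bytes) -> Tuple[int, int, Dict[int, Dict[int, ClutEntry]]]:
    """Parse a CLUT definition segment payload (bytes after the 6-byte header).

    Returns (CLUT_id, CLUT_version_number, tables), where tables maps the pixel
    depth (2, 4 or 8) to {CLUT_entry_id: ClutEntry}. Hypothetical helper.
    """
    clut_id = data[0]
    version = data[1] >> 4
    tables: Dict[int, Dict[int, ClutEntry]] = {2: {}, 4: {}, 8: {}}
    pos = 2
    while pos + 2 <= len(data):
        entry_id, flags = data[pos], data[pos + 1]
        if flags & 0x01:                       # full_range_flag: 8 bits per component
            if pos + 6 > len(data):
                raise ValueError("truncated full-range CLUT entry")
            entry = ClutEntry(*data[pos + 2:pos + 6])
            pos += 6
        else:                                  # reduced range: Y 6, Cr 4, Cb 4, T 2 bits
            if pos + 4 > len(data):
                raise ValueError("truncated reduced-range CLUT entry")
            v = int.from_bytes(data[pos + 2:pos + 4], "big")
            # Assumption: reduced values are the MSBs of the 8-bit value.
            entry = ClutEntry(y=(v >> 10) << 2,
                              cr=((v >> 6) & 0x0F) << 4,
                              cb=((v >> 2) & 0x0F) << 4,
                              t=(v & 0x03) << 6)
            pos += 4
        # Flag bits 7, 6, 5 select the 2-, 4- and 8-bit CLUTs respectively.
        for depth, bit in ((2, 0x80), (4, 0x40), (8, 0x20)):
            if flags & bit:
                tables[depth][entry_id] = entry
    return clut_id, version, tables
```

Keeping one table per pixel depth matters because the same entry id may map to different colors in the 2-, 4- and 8-bit CLUTs.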

Displaying a complete page requires all four of these segment types. The subtitle parser therefore needs a set of structures and linked lists to parse and store them.
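As an example of such a structure, the following minimal sketch parses a page composition segment payload into a page record that holds a list of region placements. Python lists stand in for the linked lists a C implementation would likely use. All names are hypothetical, and the sketch assumes the 6-byte segment header has already been stripped.

```python
from typing import List, NamedTuple

PAGE_STATES = {0: "normal case", 1: "acquisition point", 2: "mode change"}


class RegionPlacement(NamedTuple):
    region_id: int
    x: int  # region_horizontal_address
    y: int  # region_vertical_address


class PageComposition(NamedTuple):
    page_id: int
    time_out: int  # page_time_out, in seconds
    version: int
    state: str
    regions: List[RegionPlacement]


def parse_page_composition(page_id: int, data: bytes) -> PageComposition:
    """Parse a page composition segment payload (hypothetical helper)."""
    time_out = data[0]
    version = data[1] >> 4
    state = PAGE_STATES.get((data[1] >> 2) & 0x03, "reserved")
    regions = []
    # Each region entry: region_id(8) reserved(8) horizontal(16) vertical(16).
    for pos in range(2, len(data) - 5, 6):
        regions.append(RegionPlacement(
            region_id=data[pos],
            x=int.from_bytes(data[pos + 2:pos + 4], "big"),
            y=int.from_bytes(data[pos + 4:pos + 6], "big"),
        ))
    return PageComposition(page_id, time_out, version, state, regions)
```

The region composition, CLUT and object data segments would be stored in similar per-id collections. The display stage then resolves each RegionPlacement against them.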

3 Design of the set-top box subtitle decoding and display system

Subtitle reception and display on the STB are implemented by four main modules: the data extraction module, the data decoding module, the layer display module and the user control module. Their relationships are shown in Figure 2.

In the figure, the user control module responds to the user's key presses and sends messages that control the other modules. The data extraction module starts or stops the filters for subtitle data and related control messages according to the commands it receives from the user control module, and it performs the data extraction. The decoding module decodes the raw subtitle data passed on by the data extraction module. It writes the decoded data to a designated buffer, from which the layer display module reads it. The layer display module carries out the OSD display operations of the subtitle interface.
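The message flow among the four modules can be illustrated with a single-threaded toy model. This is a sketch under stated assumptions. The class, its methods and the "start"/"stop" commands are all hypothetical. The decode and draw callables stand in for the real decoder and OSD layer. A real STB would more likely run the modules as separate tasks connected by RTOS message queues.

```python
import queue


class SubtitlePipeline:
    """Toy model of the four modules; all names are hypothetical."""

    def __init__(self):
        self.control_q = queue.Queue()  # user control -> data extraction
        self.raw_q = queue.Queue()      # data extraction -> decoding
        self.display_buffer = []        # decoding -> layer display
        self.filter_pid = None          # PID being filtered; None means stopped
        self.enabled = False

    # User control module: a key press toggles subtitles on or off.
    def on_subtitle_key(self, pid):
        self.enabled = not self.enabled
        self.control_q.put(("start", pid) if self.enabled else ("stop", None))

    # Data extraction module: apply pending commands, then filter packets by PID.
    def extraction_step(self, packets):
        while not self.control_q.empty():
            cmd, pid = self.control_q.get()
            self.filter_pid = pid if cmd == "start" else None
        for pid, payload in packets:
            if self.filter_pid is not None and pid == self.filter_pid:
                self.raw_q.put(payload)

    # Decoding module: decode raw data into the shared display buffer.
    def decoding_step(self, decode):
        while not self.raw_q.empty():
            self.display_buffer.append(decode(self.raw_q.get()))

    # Layer display module: draw everything waiting in the buffer.
    def display_step(self, draw):
        while self.display_buffer:
            draw(self.display_buffer.pop(0))
```

The queues decouple the modules in the way the text describes. Only the user control module decides when filtering starts or stops, and the display side never touches raw PES data.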

Figure 2 Relationship diagram of subtitle system modules
