(Original title: In Depth | To teach AI to think, it may be necessary to teach it to draw first)

In April of this year, Google introduced AutoDraw, a drawing tool that lets AI help people produce artist-quality line drawings in just a few strokes. This playful AI application has excited the industry. Although the AI's strokes still look somewhat immature in the results released so far, that has not stopped Google from publicizing the AI system behind it, for example by publishing accessible papers. That system is called SketchRNN, and it is part of Magenta, a new Google project that tests whether AI can be used to make art.

To better understand this project and the story behind it, The Atlantic interviewed Doug Eck, the head of the Magenta project. Lei Feng Network (public account: Lei Feng Network) compiled the contents of the interview.

Eck is a professor at the University of Montreal (regarded as a hotbed of artificial intelligence) and also works for Google. He was previously responsible for Google Music and currently works at Google Brain. Since earning his computer science degree from Indiana University in 2000, Eck has built up extensive experience in music and machine learning.

To get a more vivid sense of the SketchRNN AI system, consider the following three drawings:

When people are asked to draw a pig and a truck, the results may look like this:

However, when asked to draw a "pig truck," you may visually blend the salient features of the two and draw something like this:

Although the brushwork still looks immature, this hybrid is actually the kind of output the artificial intelligence system SketchRNN produces. As Eck and his Google collaborator David Ha put it, SketchRNN's working principle can be understood as "summarizing abstract concepts in a human-like manner."

The example shows that Google is not trying to build a machine that merely draws pigs, but one that can identify and express the concept, or the defining features, of a "pig." In a nutshell, when people draw an object, they store its concept and salient features in the brain and connect "how to draw it" with those stored features. The point of SketchRNN is to let a machine learn this human ability to generalize.

To this end, Google created a game called "Quick, Draw!". As people played it, the game produced a large database of human-drawn sketches. The training data covers 75 categories, such as owls, mosquitoes, gardens, or axes, each containing at least 70,000 individual examples. Google built the SketchRNN AI system on the drawing data collected by "Quick, Draw!".

When people sketch, the colorful, noisy world is compressed into a few pencil lines. These simple strokes are SketchRNN's dataset. For each class of drawing, such as cats, yoga poses, or rain, a particular type of neural network can be trained using Google's open source TensorFlow software library. When a machine renders a picture in the style of Van Gogh, or in the manner of the original Deep Dream, the result always feels a bit weird to humans, because the machine cannot blend an object's concepts and distinctive features as flexibly or seamlessly.

Such projects can strike humans as mysterious and uncanny. What makes them interesting is that their perception of the real world is similar to ours but not exactly the same.

However, the output of SketchRNN is not strange. Eck said:

"I don't want to say it's 'very human,' but it feels right in a way that pixel-generated images do not."

This is the core insight of the Magenta team led by Eck. "Humans do not understand the world as a grid of pixels, but instead develop abstract concepts to represent what we see," Eck and Ha wrote in their paper. "From a young age, we develop the ability to communicate what we see to others by drawing."

So, if humans can do this, Google believes that machines can do the same. Last year, Google CEO Sundar Pichai announced the company's "AI First" strategy. For the company, AI is a natural extension of its original mission, "to organize the world's information and make it universally accessible and useful." Google is therefore trying to use AI to organize information so that people can access and use it. The Magenta project is one of Google's attempts under this vision.

Machine learning is a method Google has relied on heavily in recent years. One specific approach uses neural networks, which are modeled loosely on the connections in the human brain. Multilayer neural networks have proved particularly effective on difficult problems, especially translation and image recognition. Google has rebuilt many of its core services on these new architectures.

Take Google Translate as an example. Although it is a complex system built up over more than 10 years, Google needed only 9 months to rebuild it with deep learning. Against this backdrop, the use and variety of neural networks have exploded in recent years.

Building on this foundation, SketchRNN uses a generative recurrent neural network. According to Google's paper, this type of network can generate sketches of simple objects. The goal is to train a machine that can draw and generalize abstract concepts in a way similar to how humans think.

The easiest way to describe training is to think of it as a form of encoding. After data (sketches) is fed in, the neural network tries to derive general rules from the data it processes. These rules form a model of the data, stored in the mathematics that describes the network's neurons.

The resulting representation is called the latent space, or "Z" (zed). It is where everything learned during training is stored, such as a pig, a truck, or a yoga pose, and sampling from "Z" lets the network draw them again.

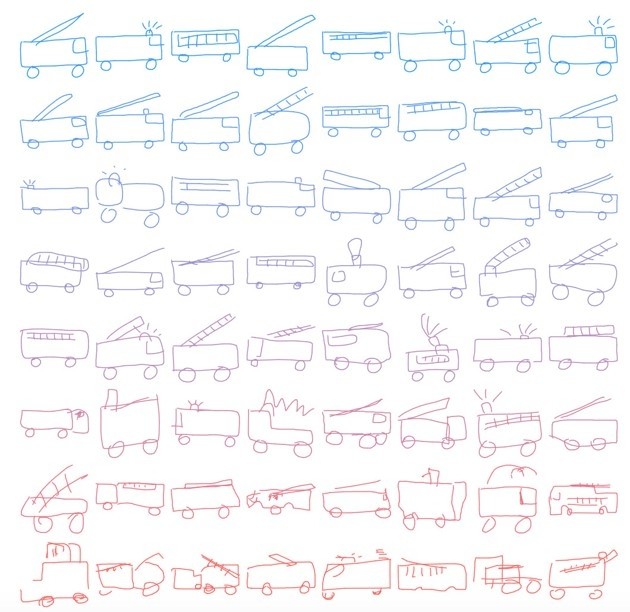
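One way to picture the latent space is as a list of numbers per sketch, where blending two lists gives a point "between" two concepts, much like the pig truck described earlier. The sketch below is only an illustration under stated assumptions: the function `interpolate_latent` and the vectors `z_pig` and `z_truck` are made up for this example, and a real model would learn such vectors and pass the blend to a decoder to produce strokes.

```python
def interpolate_latent(z_a, z_b, t):
    """Linearly blend two latent vectors; t=0 returns z_a, t=1 returns z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    return [(1.0 - t) * a + t * b for a, b in zip(z_a, z_b)]

# Hypothetical 4-dimensional latent codes (real models use many more dimensions).
z_pig = [1.0, 0.0, -2.0, 0.5]
z_truck = [-1.0, 2.0, 0.0, 0.5]

# The midpoint is a code that is half "pig" and half "truck".
z_pig_truck = interpolate_latent(z_pig, z_truck, 0.5)
print(z_pig_truck)
```

Linear blending is the simplest choice here; other interpolation schemes exist and may behave better in practice.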

So, what can SketchRNN learn? The following is an example of a neural network trained on fire engines generating new fire engines. The model has a "temperature" variable that lets researchers turn the randomness of the output up or down. In the following images, a bluish color indicates a low "temperature" and a reddish color indicates a high "temperature."

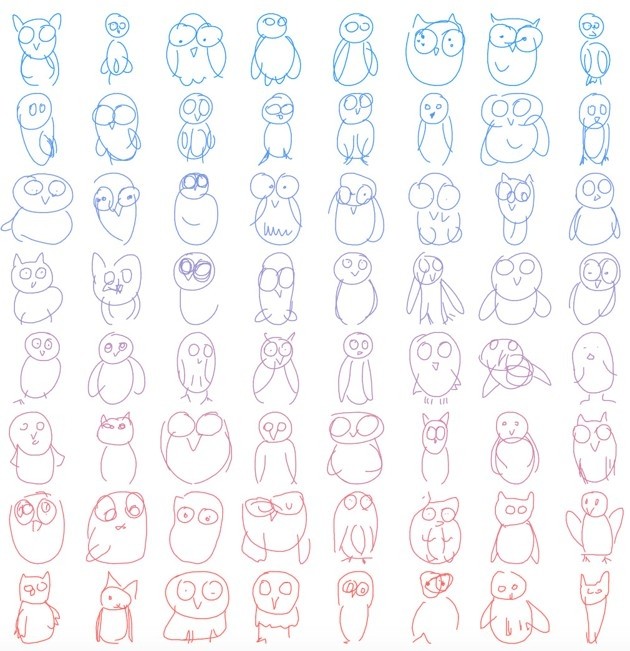
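The effect of a temperature setting can be illustrated with a generic temperature-scaled softmax, a common way to control randomness when sampling from a model. This is a minimal sketch and not SketchRNN's actual sampling code: the function names and the `logits` values are assumptions made for illustration.

```python
import math
import random

def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_index(logits, temperature, rng):
    """Draw one index according to the temperature-adjusted probabilities."""
    probs = softmax_with_temperature(logits, temperature)
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

# Hypothetical scores for three candidate next strokes.
logits = [2.0, 1.0, 0.1]
cold = softmax_with_temperature(logits, 0.1)  # nearly always picks the top score
hot = softmax_with_temperature(logits, 2.0)   # spreads probability more evenly
rng = random.Random(0)
choice = sample_index(logits, 1.0, rng)
```

At a low temperature almost all of the probability goes to the highest-scoring option, so the output is predictable; at a high temperature the options become closer to equally likely, so the output is more varied.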

Or perhaps you would rather see owls:

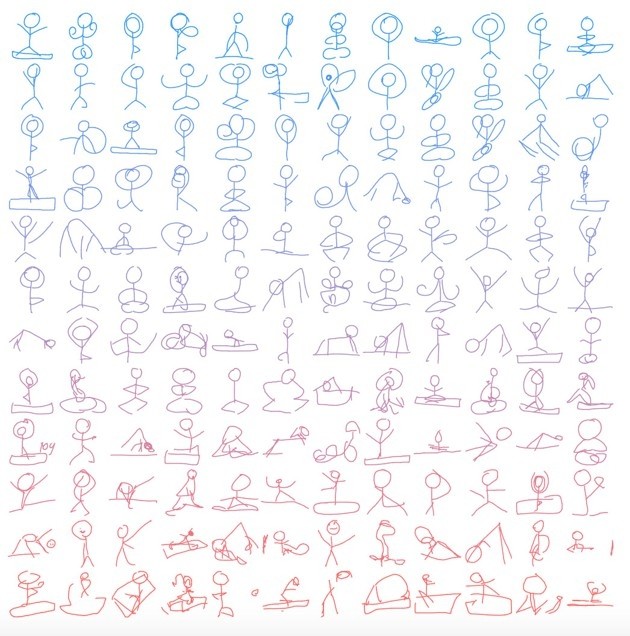

Or the best example, yoga poses:

As these cases show, SketchRNN's output closely resembles human drawing, yet it was not drawn by humans. Rather, the model is reconstructing the way a human might draw something. Some of the reconstructions are very good, while others are not.

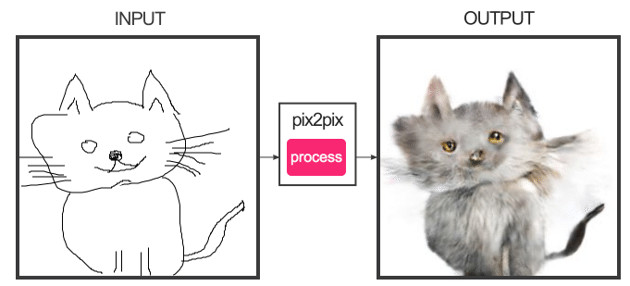

SketchRNN can also accept human-drawn images as input. When a person feeds in a drawing, SketchRNN tries to make sense of it. The following comes from a model trained on drawings of cats. What changes can you find across the three cat images?

Going from the left-hand input to the right-hand output, the third eye has been removed. That is because the model knows that cats have triangular ears, whiskers, round faces, and only two eyes.

Of course, the model does not know what an ear is or what a face is. It knows nothing about the world these sketches depict. But it does know how humans depict cats, pigs, or sailboats.

Eck said, "When we start to generate a drawing of a sailboat, the model fills in with hundreds of other sailboats that could come from that drawing. This makes sense to us, because the model has learned an ideal sailboat from all of its training data."

Train a network to draw rain, then feed it a sketch of a cloud, and it does the following:

Raindrops fall from the cloud that was given as input. That is because many people who draw rain first draw a cloud and then draw the rain falling from it. So if the neural network sees a cloud, it makes rain fall from the bottom of the shape. (Interestingly, if you draw the rain first, the model will not produce a cloud.)

This is interesting work, but what does such a project mean for reverse engineering human thinking?

Eck is interested in sketches because they convey so much with so little information. A smiley face takes only a few strokes and looks nothing like a pixel-by-pixel image of a face, yet anyone over the age of 3 can recognize it as a face and tell whether it is happy or sad. Eck sees this as a form of compression, a code that SketchRNN can decode and even re-encode.

OpenAI researcher Andrej Karpathy is also very interested in SketchRNN's work; OpenAI is another center of artificial intelligence research. However, he pointed out that the project builds in many assumptions, which may limit how much it helps the broader enterprise of developing artificial intelligence.

"The generative models we develop are usually kept as independent as possible of the details of the dataset. Whatever you feed in, whether images, audio, text, or anything else, they should work. Apart from images, none of these are made up of strokes."

What Eck and Ha are developing is closer to an AI that can play chess than to an AI that can play any game. So for Karpathy, the current scope of their work seems limited.

But there are reasons to believe that line drawings are fundamental to human thinking. Google employees are not the only researchers drawn to the power of sketches. As early as 2012, James Hays of the Georgia Institute of Technology collaborated with Mathias Eitz and Marc Alexa of the Technical University of Berlin to create a sketch dataset and a machine learning system for recognizing the sketches.

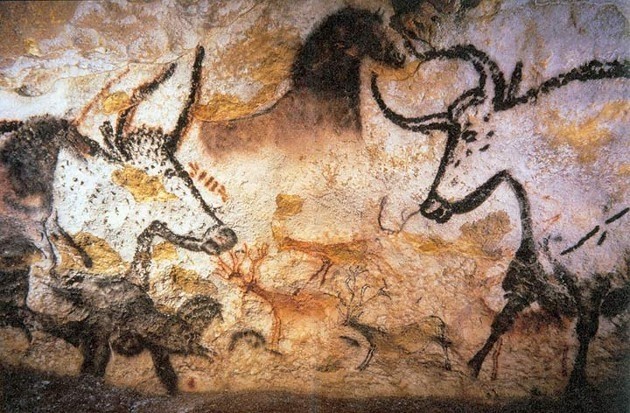

For them, sketches are a form of "universal communication" that all people with standard cognitive function can use. They note that since prehistoric times, human beings have depicted the world in sketch-like rock carvings and cave paintings. Such pictographs predate the emergence of language by tens of thousands of years. Today, the ability to draw and recognize sketches is a basic skill.

Dirk Walther, a neuroscientist at the University of Toronto, pointed out in a paper that simple abstract sketches activate our brains in a way similar to real stimuli. Walther's hypothesis is that line drawings capture the essence of our natural world, even though, pixel by pixel, a line drawing of a cat looks nothing like a photograph of a cat.

A sketch may be a way of getting at the conceptual level at which we store an object, what we call its "essence." In other words, sketches may tell us how humans began to think as our ancestors became modern over the past 100,000 years. Sketches and cave paintings may show how we moved from everyday experience to abstraction.

Much of modern life rests on this kind of transformation: language, money, mathematics, and computation itself. It is therefore reasonable to suppose that sketches could play an important role in creating significant artificial intelligence.

Of course, for humans, the sketch is a depiction of real things. We can easily understand the relationship between abstract lines and actual things. This concept is of great significance to us.

For SketchRNN, a sketch is a sequence of strokes, a shape formed over time. The machine's task is to extract the essence of what the drawings depict and try to use it to understand the world.

The SketchRNN team is exploring in many directions. They may build a system that tries to improve its results through human feedback. They could train the model on a wider variety of sketches. Perhaps they will find a way to test whether their models generalize to realistic images. But they themselves admit that SketchRNN is only a first step and that there is much still to learn.
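To make "a sequence of strokes" concrete, a drawing can be stored as a list of pen movements rather than as pixels. The sketch below is an illustration that assumes a simplified layout of (dx, dy, pen_lifted) steps, similar in spirit to the offset-based formats used for sketch data; the function `to_stroke3` and the sample `drawing` are hypothetical and not taken from Google's code.

```python
def to_stroke3(strokes):
    """Convert pen strokes (lists of absolute (x, y) points) into
    (dx, dy, pen_lifted) steps, where pen_lifted is 1 on a stroke's last point."""
    sequence = []
    prev_x, prev_y = 0, 0  # assumption: the pen starts at the origin
    for stroke in strokes:
        for i, (x, y) in enumerate(stroke):
            pen_lifted = 1 if i == len(stroke) - 1 else 0
            # Store the movement relative to the previous point.
            sequence.append((x - prev_x, y - prev_y, pen_lifted))
            prev_x, prev_y = x, y
    return sequence

# A hypothetical two-stroke drawing: a horizontal line, then a vertical one.
drawing = [[(0, 0), (10, 0)], [(5, -5), (5, 5)]]
steps = to_stroke3(drawing)
print(steps)
```

Because each step records only a movement and whether the pen lifts, the order in which a person drew the lines is preserved, which is the information a pixel image throws away.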

The history of human art is not comparable to the technological age.

For Eck, the work is more about understanding the basis of how humans think. In his view, a core part of art is that it represents basic humanity. To understand deep learning, it is also necessary to understand the basic mechanisms of human life: how we see the world, how we talk, how we recognize faces, how we compose words into stories, and how we arrange music. What it captures is not tied to any one specific person, but represents an abstract human being.

Finally, if you want to better understand the SketchRNN AI system, Lei Feng Network offers a small bonus: follow this link to get Google's official paper.

Via The Atlantic
