Understanding a well-functioning system is the best preparation for dealing with the inevitable failures.

The oldest joke in open source software is the claim that "the code is self-documenting." Experience shows that reading the source is like listening to the weather forecast: sensible people still go outside and check the sky. This article describes how to use familiar debugging tools to observe and analyze a Linux system as it boots. Analyzing the boot process of a system that works well prepares users and developers to cope with the inevitable failures.

In some ways, the boot process is surprisingly simple. The kernel starts up single-threaded and synchronous on a single core, which seems almost comprehensible. But how does the kernel itself get started? What functions do the initrd (initial ramdisk) and the bootloader perform? And why is the LED on the Ethernet port always lit?

Read on for the answers. The code for the demos and exercises described here is also available on GitHub.

The beginning of boot: the OFF state

Wake-on-LAN

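The OFF state means that the system has no power, right? The apparent simplicity is deceptive. For example, if the system has Wake-on-LAN (WOL) enabled, the Ethernet LED stays lit. Check whether this is the case with the following command:

# sudo ethtool <interface name>

where <interface name> might be, for example, eth0. If the "Wake-on" field in the output shows g, a remote host can boot the system by sending a magic packet. If you do not want the system to be woken remotely, turn WOL off in the BIOS menu or with:

# sudo ethtool -s <interface name> wol d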

The processor that responds to the magic packet may be part of the network interface or the baseboard management controller (BMC).
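To make the magic packet less mysterious, here is a minimal Python sketch of its payload, assuming the commonly documented layout of six 0xFF bytes followed by the target MAC address repeated 16 times. The function name `build_magic_packet` is made up for this illustration and does not come from any WOL tool.

```python
def build_magic_packet(mac: str) -> bytes:
    """Return the 102-byte Wake-on-LAN payload for a MAC address."""
    # Accept "aa:bb:cc:dd:ee:ff" or "aa-bb-cc-dd-ee-ff".
    hex_digits = mac.replace(":", "").replace("-", "")
    if len(hex_digits) != 12:
        raise ValueError(f"expected 6 octets, got {mac!r}")
    mac_bytes = bytes.fromhex(hex_digits)  # raises ValueError on non-hex input
    # Six 0xFF bytes, then the target MAC repeated 16 times.
    return b"\xff" * 6 + mac_bytes * 16
```

Such a payload is typically sent as a UDP broadcast on the local network, but the transport details depend on the setup and are not covered here.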

Intel Management Engine, Platform Controller Hub, and Minix

The BMC is not the only microcontroller (MCU) that may still be listening when the system is nominally off. x86_64 systems also include the Intel Management Engine (IME) software suite for remote management. A wide variety of devices, from servers to laptops, include this technology, which enables features such as KVM Remote Control and the Intel Capability Licensing Service. According to Intel's own detection tool, the IME has unpatched vulnerabilities. The bad news is that the IME is difficult to disable. Trammell Hudson launched a me_cleaner project that removes some of the more egregious IME components, such as the embedded web server, but it can also brick the system on which it runs.

The IME firmware and the System Management Mode (SMM) software that follows it at boot are based on the Minix operating system and run on a separate processor, the Platform Controller Hub, rather than on the main CPU. The SMM then launches the Unified Extensible Firmware Interface (UEFI) software, about which much has already been written, on the main processor. The coreboot group at Google has started an ambitious Non-Extensible Reduced Firmware (NERF) project that aims to replace not only UEFI but also early Linux userspace components such as systemd. While we wait for the results of these efforts, Linux users can now buy laptops with the IME disabled from Purism, System76, or Dell, and laptops with 64-bit ARM processors remain something to look forward to.

Bootloaders

Besides starting buggy spyware, what does early boot firmware do? The job of a bootloader is to give a newly powered processor the resources it needs to run a general-purpose operating system such as Linux. At power-on, there is no virtual memory, and there is not even DRAM until its controller has been brought up. The bootloader then turns on power supplies and scans buses and interfaces to locate the kernel image and the root filesystem. Popular bootloaders such as U-Boot and GRUB support familiar interfaces such as USB, PCI, and NFS, as well as more embedded-specific devices such as NOR and NAND flash. Bootloaders also interact with hardware security devices such as Trusted Platform Modules (TPMs) to establish a chain of trust from the earliest stage of boot.

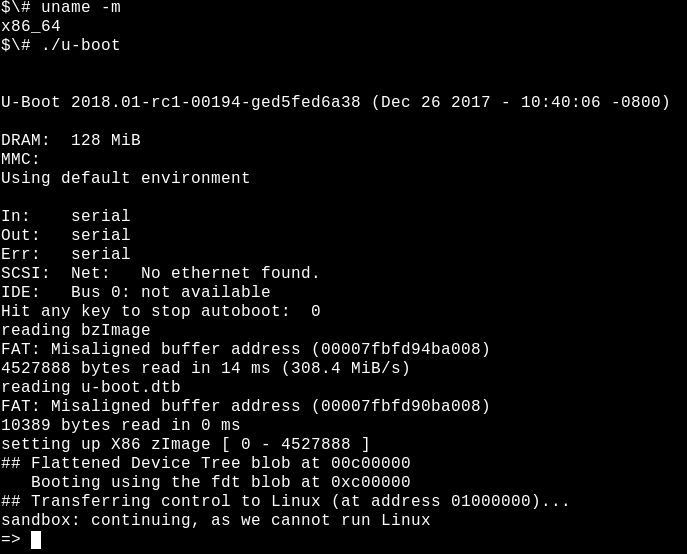

Running the U-Boot bootloader in the sandbox on the build host.

U-Boot, the widely used open source bootloader, is supported on systems ranging from the Raspberry Pi to Nintendo devices, automotive boards, and Chromebooks. It has no system log, and when things go wrong there is often not even any console output. To make debugging easier, the U-Boot team provides a sandbox in which patches can be tested on the build host, or even in a nightly continuous integration (CI) system. If common development tools such as Git and the GNU Compiler Collection (GCC) are installed on your system, trying out the U-Boot sandbox is relatively straightforward:

# git clone git://git.denx.de/u-boot; cd u-boot

# make ARCH=sandbox defconfig

# make; ./u-boot

=> printenv

=> help

That's it: you are running U-Boot on x86_64 and can test tricky features such as repartitioning of mock storage devices, TPM-based secret-key manipulation, and hotplugging of USB devices. The U-Boot sandbox can even be single-stepped under the GDB debugger. Developing in the sandbox is about 10 times faster than testing by reflashing the bootloader onto a board, and a "bricked" sandbox can be recovered with Ctrl+C.

Starting up the kernel

Provisioning a booting kernel

When the bootloader has finished its tasks, it jumps to the kernel code that it has loaded into main memory and begins execution, passing along any command-line options that the user has specified. What kind of program is the kernel? The command file /boot/vmlinuz reports that it is a "bzImage", meaning a big compressed image. The Linux source tree contains a tool, extract-vmlinux, that uncompresses this file:

# scripts/extract-vmlinux /boot/vmlinuz-$(uname -r) > vmlinux

# file vmlinux

vmlinux: ELF 64-bit LSB executable, x86-64, version 1 (SYSV), statically linked, stripped

The kernel is an Executable and Linking Format (ELF) binary, just like Linux userspace programs. This means we can inspect it with commands from the binutils package, such as readelf. Compare the output of, for example:

# readelf -S /bin/date

# readelf -S vmlinux

The list of sections in the two binaries is largely the same.
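What file and readelf report comes from a small fixed header at the start of every ELF file. The following Python sketch decodes a few of those fields for little-endian files, assuming the standard ELF header layout. The function name `describe_elf` and the dictionary keys are chosen for this illustration only.

```python
import struct

ELF_CLASSES = {1: "ELF32", 2: "ELF64"}
ELF_TYPES = {1: "relocatable", 2: "executable", 3: "shared object", 4: "core"}


def describe_elf(header: bytes) -> dict:
    """Decode the first 20 bytes of a little-endian ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    if header[5] != 1:
        raise ValueError("this sketch handles little-endian files only")
    # e_type and e_machine are 16-bit fields right after the 16-byte e_ident.
    e_type, e_machine = struct.unpack_from("<HH", header, 16)
    return {
        "class": ELF_CLASSES.get(header[4], "unknown"),
        "type": ELF_TYPES.get(e_type, "other"),
        "machine": e_machine,  # 62 is EM_X86_64
    }
```

Feeding it the first bytes of /bin/date and of the extracted vmlinux should mirror the "shared object" versus "executable" distinction that file prints.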

So the kernel must start up much like any other Linux ELF binary. But how do userspace programs actually start? In the main() function? Not exactly.

Before the main() function can run, a program needs an execution context that includes stack memory and file descriptors for stdin, stdout, and stderr. Userspace programs obtain these resources from the standard library, which is glibc on most Linux systems. Consider the following output:

# file /bin/date

/bin/date: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, for GNU/Linux 2.6.32, BuildID[sha1]=14e8563676febeb06d701dbee35d225c5a8e565a, stripped

ELF binaries have an interpreter, just as Bash and Python scripts do, but the interpreter does not need to be specified with #! as in a script, because ELF is Linux's native format. The ELF interpreter provisions a binary with the resources it needs by calling the _start() function, which is available in the glibc source package and can be inspected in GDB. The kernel obviously has no interpreter and must provision itself. How does this work?
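The interpreter path that file prints is stored in a program header of type PT_INTERP. As a rough illustration, the Python sketch below walks the program headers of a little-endian ELF64 image and returns that path. The field offsets follow the standard ELF64 layout, and the function name `elf64_interpreter` is hypothetical.

```python
import struct

PT_INTERP = 3  # program-header type that names the ELF interpreter


def elf64_interpreter(image: bytes):
    """Return the interpreter path of a little-endian ELF64 image, or None."""
    if image[:4] != b"\x7fELF" or image[4] != 2 or image[5] != 1:
        raise ValueError("expected a little-endian ELF64 image")
    # e_phoff sits at byte 32; e_phentsize and e_phnum at bytes 54 and 56.
    (e_phoff,) = struct.unpack_from("<Q", image, 32)
    e_phentsize, e_phnum = struct.unpack_from("<HH", image, 54)
    for index in range(e_phnum):
        base = e_phoff + index * e_phentsize
        p_type, _p_flags, p_offset = struct.unpack_from("<IIQ", image, base)
        if p_type == PT_INTERP:
            # p_filesz is the sixth field, 32 bytes into the program header.
            (p_filesz,) = struct.unpack_from("<Q", image, base + 32)
            raw = image[p_offset:p_offset + p_filesz]
            return raw.rstrip(b"\x00").decode()
    return None
```

Called on the bytes of /bin/date, it should return the same interpreter path that file reports. On the extracted vmlinux it should return None, which matches the observation that the kernel has no interpreter.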

Inspecting the kernel's startup with GDB gives the answer. First install the kernel debug package, which contains an unstripped vmlinux, for example with apt-get install linux-image-amd64-dbg, or compile and install your own kernel from source, for example by following the instructions in the Debian Kernel Handbook. Running gdb vmlinux followed by info files shows the ELF section init.text. List the start of program execution in init.text with l *(address), where address is the hexadecimal start of init.text. GDB shows that the x86_64 kernel starts up in the kernel file arch/x86/kernel/head_64.S, where we find the assembly function start_cpu0() and code that explicitly creates a stack and decompresses the zImage before calling the x86_64 start_kernel() function. The 32-bit ARM kernel has a similar file, arch/arm/kernel/head.S. start_kernel() is not architecture-specific, so the function lives in the kernel's init/main.c. start_kernel() is arguably Linux's true main() function.

From start_kernel() to PID 1

The kernel's hardware manifest: the device tree and ACPI tables

At boot, the kernel needs information about the hardware beyond the processor type for which it was compiled. The instructions in the code are augmented by configuration data that is stored separately. There are two main ways of storing this data: device trees and Advanced Configuration and Power Interface (ACPI) tables. By reading these files at each boot, the kernel learns what hardware it has to run on.

For embedded devices, the device tree is a manifest of the installed hardware. The device tree is simply a file that is compiled at the same time as the kernel source and is usually located in /boot alongside vmlinux. To see what is in the device tree on an ARM device, run the strings command from the binutils package on a file whose name matches /boot/*.dtb, where dtb stands for device-tree binary. The device tree can be modified simply by editing the JSON-like source files that make it up and rerunning the special dtc compiler provided with the kernel source. Although the device tree is a static file whose path is usually passed to the kernel by the bootloader on the command line, a device-tree overlay facility has been added in recent years that lets the kernel dynamically load additional fragments in response to hotplug events after boot.
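Beyond running strings on a .dtb file, a quick sanity check is to decode its fixed-size header. The Python sketch below assumes the flattened device tree header layout of ten big-endian 32-bit fields starting with the magic number 0xd00dfeed. The function name `parse_fdt_header` is made up for this example.

```python
import struct

FDT_MAGIC = 0xD00DFEED

FDT_FIELDS = (
    "magic", "totalsize", "off_dt_struct", "off_dt_strings",
    "off_mem_rsvmap", "version", "last_comp_version",
    "boot_cpuid_phys", "size_dt_strings", "size_dt_struct",
)


def parse_fdt_header(blob: bytes) -> dict:
    """Decode the 40-byte header at the start of a device-tree binary."""
    if len(blob) < 40:
        raise ValueError("too short to hold a device-tree header")
    # Every header field is a big-endian unsigned 32-bit integer.
    header = dict(zip(FDT_FIELDS, struct.unpack_from(">10I", blob, 0)))
    if header["magic"] != FDT_MAGIC:
        raise ValueError("bad magic: not a flattened device tree")
    return header
```

A totalsize that differs from the file's actual size is one hint that a .dtb file has been truncated or padded.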

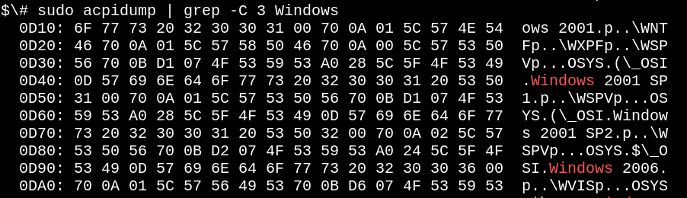

The x86 family and many enterprise-class ARM64 devices use the alternative ACPI mechanism. Unlike the device tree, the ACPI information is exposed in the /sys/firmware/acpi/tables virtual filesystem, which the kernel creates at boot by accessing the onboard ROM. An easy way to read the ACPI tables is the acpidump command from the acpica-tools package.

The ACPI tables for Lenovo laptops are all set for Windows 2001.

Yes, your Linux system is ready for Windows 2001, should you care to install it. Unlike the device tree, which is more of a hardware description language, ACPI has both methods and data. ACPI methods remain active after boot. For example, running the acpi_listen command (from the acpid package) and then opening and closing the laptop lid shows that ACPI functionality is running all the time. Temporarily and dynamically overwriting the ACPI tables is possible, but changing them permanently requires interacting with the BIOS menu at boot or reflashing the ROM. If you are going to that much trouble, perhaps you should just install coreboot, the open source firmware replacement.
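Every ACPI table begins with the same 36-byte description header, so the files under /sys/firmware/acpi/tables can be examined without special tools. The Python sketch below decodes that header and verifies the checksum, assuming the standard layout and the rule that all bytes of a table sum to zero modulo 256. The function name `parse_acpi_header` is hypothetical, and reading the sysfs files usually requires root.

```python
import struct


def parse_acpi_header(table: bytes) -> dict:
    """Decode the common 36-byte header found at the start of an ACPI table."""
    if len(table) < 36:
        raise ValueError("too short to hold an ACPI table header")
    (signature, length, revision, _checksum, oem_id, oem_table_id,
     oem_revision, creator_id, creator_revision) = struct.unpack_from(
        "<4sIBB6s8sI4sI", table, 0)
    return {
        "signature": signature.decode("ascii", "replace"),
        "length": length,
        "revision": revision,
        "oem_id": oem_id.decode("ascii", "replace").strip(),
        "oem_table_id": oem_table_id.decode("ascii", "replace").strip(),
        "oem_revision": oem_revision,
        "creator_id": creator_id.decode("ascii", "replace"),
        "creator_revision": creator_revision,
        # A valid table's bytes, checksum byte included, sum to 0 modulo 256.
        "checksum_ok": sum(table[:length]) % 256 == 0,
    }
```

Calling it on the bytes of a table such as the DSDT should show the OEM strings that the vendor burned into the firmware.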

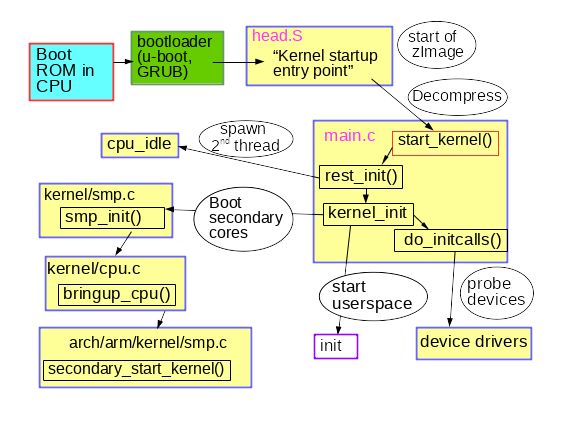

From start_kernel() to user space

The code in init/main.c is surprisingly readable and, amusingly, still carries Linus Torvalds' original copyright from 1991-1992. The lines shown by dmesg | head on a newly booted system come mostly from this source file. The first CPU is registered with the system, global data structures are initialized, and the scheduler, interrupt handlers (IRQs), timers, and console are brought online one by one, in strict order. Until the function timekeeping_init() runs, all timestamps are zero. This part of kernel initialization is synchronous, meaning that execution occurs in exactly one thread and no function runs until the previous one has completed and returned. As a result, the dmesg output is completely reproducible, even between two systems, as long as they have the same device tree or ACPI tables. Linux is behaving like one of the real-time operating systems (RTOS) that run on MCUs, such as QNX or VxWorks. This situation persists into the function rest_init(), which start_kernel() calls as it terminates.
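The point at which timestamps stop being zero can be located mechanically. Here is a small Python sketch that scans dmesg text for the first line with a nonzero timestamp. It assumes the usual "[ seconds.microseconds] message" prefix, and the function name `first_nonzero_timestamp` is invented for this example.

```python
import re

# Matches the "[    0.004000] message" prefix that dmesg prints by default.
STAMP = re.compile(r"^\[\s*(\d+\.\d+)\]\s?(.*)$")


def first_nonzero_timestamp(dmesg_text: str):
    """Return (line_number, seconds, message) for the first nonzero stamp."""
    for number, line in enumerate(dmesg_text.splitlines(), start=1):
        match = STAMP.match(line)
        if match and float(match.group(1)) > 0.0:
            return number, float(match.group(1)), match.group(2)
    return None  # no timestamped line with a nonzero value was found
```

Everything logged before the returned line ran before the kernel's clock was set up.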

Summary of the early kernel boot process.

The function rest_init() spawns a new thread that runs kernel_init(), which in turn invokes do_initcalls(). Users can watch initcalls in action by appending initcall_debug to the kernel command line, which produces a dmesg entry each time an initcall function runs. Initcalls pass through a sequence of levels: early, core, postcore, arch, subsys, fs, device, and late. The most visible part of the initcalls is the probing and setup of all the processor's peripherals (buses, network, storage, displays, and so on), accompanied by the loading of their kernel modules. rest_init() also spawns a second thread on the boot processor, which begins by running cpu_idle() while it waits for the scheduler to assign it work.
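Once initcall_debug is enabled, the resulting dmesg entries can be ranked to find slow initcalls. The Python sketch below assumes entries of the form "initcall NAME+0x0/0x10 returned 0 after 123 usecs"; the exact wording may differ between kernel versions, so check it against your own dmesg output first. The function name `slowest_initcalls` is hypothetical.

```python
import re

# Assumed line format; module initcalls carry an extra " [module]" suffix.
INITCALL = re.compile(
    r"initcall (\S+)(?: \[[^\]]+\])? returned (-?\d+) after (\d+) usecs")


def slowest_initcalls(dmesg_text: str, top: int = 3) -> list:
    """Return up to `top` (function, microseconds) pairs, slowest first."""
    timings = []
    for line in dmesg_text.splitlines():
        match = INITCALL.search(line)
        if match:
            # Drop the "+offset/size" suffix to keep only the symbol name.
            name = match.group(1).split("+")[0]
            timings.append((name, int(match.group(3))))
    timings.sort(key=lambda item: item[1], reverse=True)
    return timings[:top]
```

Passing in the full dmesg text of a boot with initcall_debug set gives a quick list of where boot time is going.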

kernel_init() also sets up symmetric multiprocessing (SMP). With recent kernels, you can find this point in the dmesg output by looking for "Bringing up secondary CPUs...". SMP proceeds by "hotplugging" CPUs, meaning that the kernel manages their lifecycle with a state machine that is conceptually similar to the one used for devices such as hotplugged USB sticks. The kernel's power-management system frequently takes individual cores offline and wakes them as needed, so the same CPU hotplug code is called over and over on a machine that is not busy. You can observe the power-management system invoking the CPU hotplug code with the BCC tool offcputime.py.
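One way to see which cores are currently online is the kernel's CPU list in sysfs. The article does not mention this file, so treat the path /sys/devices/system/cpu/online as an assumption of this sketch. The Python function below, with the made-up name `parse_cpu_list`, expands the compact "0-3,5" notation used there into a list of CPU numbers.

```python
def parse_cpu_list(text: str) -> list:
    """Expand a kernel CPU list such as "0-3,5" into [0, 1, 2, 3, 5]."""
    cpus = []
    for chunk in text.strip().split(","):
        if not chunk:
            continue  # tolerate an empty string or a trailing comma
        if "-" in chunk:
            first, last = chunk.split("-")
            cpus.extend(range(int(first), int(last) + 1))
        else:
            cpus.append(int(chunk))
    return cpus
```

Reading the file repeatedly and comparing the parsed lists would show cores coming and going if the system takes them fully offline.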

Note that the code in init/main.c has nearly finished executing by the time smp_init() runs: the boot processor has completed most of the one-time initialization, which the other cores do not need to repeat. Nonetheless, per-CPU threads must still be spawned for each core to manage its interrupts (IRQs), workqueues, timers, and power events. For example, the ps -o psr command shows the per-CPU threads that service softirqs and workqueues:

# ps -o pid,psr,comm $(pgrep ksoftirqd)

  PID PSR COMMAND
    7   0 ksoftirqd/0
   16   1 ksoftirqd/1
   22   2 ksoftirqd/2
   28   3 ksoftirqd/3

# ps -o pid,psr,comm $(pgrep kworker)

  PID PSR COMMAND
    4   0 kworker/0:0H
   18   1 kworker/1:0H
   24   2 kworker/2:0H
   30   3 kworker/3:0H
[...]

Here the PSR field stands for "processor". Each core must also host its own timers and cpuhp hotplug handlers.
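With many cores, the ps listing is easier to read when grouped by processor. This Python sketch, using the hypothetical name `threads_by_cpu`, groups the output of ps -o pid,psr,comm by the PSR column. It assumes the three whitespace-separated columns shown above.

```python
from collections import defaultdict


def threads_by_cpu(ps_output: str) -> dict:
    """Map each PSR value to a list of (pid, command) tuples."""
    groups = defaultdict(list)
    for line in ps_output.splitlines():
        fields = line.split(None, 2)
        # Skip the "PID PSR COMMAND" header and any malformed lines.
        if len(fields) != 3 or not fields[0].isdigit() or not fields[1].isdigit():
            continue
        pid, psr, comm = fields
        groups[int(psr)].append((int(pid), comm.strip()))
    return dict(groups)
```

Each key of the resulting dictionary is a core, and its value lists the kernel threads pinned there.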

So how does userspace finally start? Near its end, kernel_init() looks for an initrd that can execute the init process on its behalf. If it finds none, the kernel executes init directly. Why, then, would anyone want an initrd?

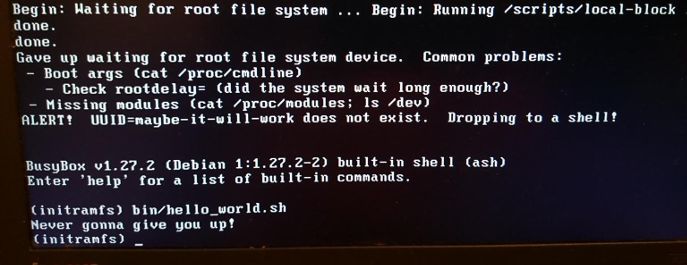

Early userspace: who ordered the initrd?

Besides the device tree, another file path that can optionally be supplied to the kernel at boot is that of the initrd. The initrd usually lives in /boot, alongside the bzImage file vmlinuz on x86 systems or alongside the uImage and device tree on ARM systems. The contents of the initrd can be listed with the lsinitramfs tool from the initramfs-tools-core package. Distribution initrd schemes contain minimal /bin, /sbin, and /etc directories along with kernel modules, plus some files in /scripts. All of this should look familiar, because the initrd is for the most part simply a minimal Linux root filesystem. The apparent similarity is a bit deceptive, because nearly all the executables in /bin and /sbin inside the ramdisk are symbolic links to the BusyBox binary, which makes those directories about 10 times smaller than their glibc-based counterparts.
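The claim about BusyBox symlinks is easy to check on an unpacked initrd. The Python sketch below computes what fraction of a directory's entries are symbolic links whose target is named busybox. The function name `busybox_link_share` is invented here, and it assumes the initrd has already been extracted to a directory by whatever means your distribution provides.

```python
import os


def busybox_link_share(directory: str) -> float:
    """Fraction of entries in `directory` that are symlinks to busybox."""
    total = 0
    links = 0
    with os.scandir(directory) as entries:
        for entry in entries:
            total += 1
            if entry.is_symlink():
                # Compare only the final path component of the link target.
                target = os.readlink(entry.path)
                if os.path.basename(target) == "busybox":
                    links += 1
    return links / total if total else 0.0
```

Running it on the bin directory of an extracted initrd and on the regular /bin makes the difference between the two filesystems concrete.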

Why bother creating an initrd if all it does is load some modules and then start init on the regular root filesystem? Consider an encrypted root filesystem. Decryption may depend on loading a kernel module that is stored in /lib/modules on the root filesystem and, unsurprisingly, in the initrd as well. The crypto module could be statically compiled into the kernel instead of being loaded from a file, but there are several reasons for not wanting to do so. For example, statically compiling the kernel with modules could make it too large to fit in the available storage, or static compilation may violate the terms of a software license. As you would expect, storage, network, and human input device (HID) drivers may also be present in the initrd. In short, the initrd holds any code that is not part of the kernel proper but is needed to mount the root filesystem. The initrd is also a place where users can store their own custom ACPI table code.

Some fun with the rescue shell and a custom initrd.

The initrd is also useful for testing filesystems and data-storage devices themselves. Store the test tools in the initrd and run the tests from memory rather than from the object under test.

At last, when init runs, the system is up! Since the secondary processors are now running, the machine has become the asynchronous, preemptible, unpredictable, high-performance creature that we know and love. Indeed, ps -o pid,psr,comm -p 1 is liable to show that the userspace init process is no longer running on the boot processor.

Summary

The Linux boot process sounds forbidding, considering how many different pieces of software take part even on simple embedded devices. Looked at another way, the boot process is rather simple, because the bewildering complexity caused by features such as preemption, RCU, and race conditions is absent during boot. Focusing only on the kernel and PID 1 overlooks the large amount of work that bootloaders and subsidiary processors may do to prepare the platform for the kernel to run. Although the kernel is certainly unique among Linux programs, some insight into its structure can be gained by applying the same tools used to inspect other ELF binaries. Studying the boot process while it works well prepares system maintainers for the failures when they come.
